Use of Machine Learning Techniques to Determine Trends in Pediatric Orthopaedic Literature

Andrew Rowley M1, David C Sing2, William R Barfield1, Robert F Murphy2 and James F Mooney3,*

1Department of Orthopaedics, Medical University of South Carolina, USA

2Department of Orthopaedic Surgery, Boston University SOM, USA

3Department of Orthopaedic Surgery, Atrium Health Wake Forest Baptist, USA

Received Date: 02/11/2022; Published Date: 21/11/2022

*Corresponding author: James F Mooney, III, MD, Department of Orthopaedic Surgery, Atrium Health Wake Forest Baptist, USA

Abstract

Machine Learning (ML), a form of artificial intelligence, has been used to determine trends in multiple scientific areas. There are no published demonstrations of the utilization of ML methods to analyze Pediatric Orthopaedic literature.

All abstracts and article titles published in the Journals of Pediatric Orthopaedics A&B from 2000-2020 were analyzed using Natural Language Processing (NLP), which is a form of Machine Learning. A specific model of NLP (Latent Dirichlet Allocation, or LDA) was utilized to delineate 50 groups of often-associated terms (words), which are defined by NLP as “topics”. Goals of the study included ranking the “Topics” from highest to lowest prevalence and by the number of associated abstracts published within JPO A&B during the period reviewed. In addition, the title of the best-fit abstract was to be determined by the model, and the 3 topics that trended with the greatest (hottest) and least (coldest) activity over time were to be identified.

A total of 5145 abstracts were analyzed, and 50 topics were generated. All topics were ranked by prevalence, and the number of abstracts associated with each topic was noted. The 3 topics that trended “Hottest” and “Coldest” over the study period (p < 0.001) are presented graphically.

This project demonstrates the feasibility of the application of Machine Learning to Pediatric Orthopaedic literature. Routine use of algorithm-based tools may minimize human error and bias that can be inherent in large and tedious reviews. The potential benefits of these types of processes could be significant in the future.

Introduction

In 2017, Sing et al described the initial use of “Machine Learning” to discover, assess and rank thematic topics published in Spine-related literature sources over a specific period of time [1]. Even in the relatively short time since that publication, the computer processes and artificial intelligence methods used in that study have evolved and become more accessible. In light of these changes, we hypothesized that a similar review could be focused on journals that are exclusively sources of Pediatric Orthopaedic literature. Within the English-language literature, the two editions of the Journal of Pediatric Orthopaedics (Editions A and B) (JPOA&B) focus only on Pediatric Orthopaedic Surgery subjects.

The goals of this project were to use the most current machine learning methods to determine thematic topics published in JPOA&B, to rank these topics from most to least common, as well as to document changes in the activity of these topics over the time period in question. Finally, we planned to use the same techniques to identify specific articles that matched most closely to these topics. By doing so, we hoped to demonstrate how modern, widely available machine learning methods can provide a novel way to analyze a segment of the pediatric orthopaedic literature. We hypothesized that these techniques could be used to document changes in focus and research interest over an extended period of time within two journals that are sources of exclusively Pediatric Orthopaedic information.

Materials and Methods

Analysis of the literature published in JPO A&B from 2000-2020 was performed using LDAShiny [2], an open-source application for R, which is free software for statistical computing. Briefly, LDAShiny carries out Latent Dirichlet Allocation (LDA) to perform topic modeling. LDA is a statistical model that examines a body of text and assumes that each document is a mixture of “topics”, and that each topic is characterized by a distribution of words [3]. For example, LDA will discover groups of “terms” (words or word stems) that frequently occur together across a large collection of documents; each such group is considered a topic. Assignment of a meaningful label to an individual topic is up to the user, and often requires a specialized fund of knowledge regarding the subject matter being analyzed [4].

LDAShiny provides an interface for researchers to harness the ability of LDA and machine learning to examine large amounts of scientific literature. To import data into LDAShiny, Scopus (Elsevier, Amsterdam, The Netherlands), an abstract and citation database, was used to download information regarding articles from JPO-A and JPO-B for the years 2000-2020. Another journal that focuses exclusively on Pediatric Orthopaedics (the Journal of Children’s Orthopaedics) was excluded because it did not begin publication until 2007, which the authors felt added an unnecessary level of potential variability to the analysis.

The information exported from Scopus for each article included author(s), year published, title and abstract. Only research articles were included in the search. Reviews, letters, and editorials were excluded manually. This search yielded 5,145 total abstracts for analysis. As Scopus only allows for 2,000 articles to be exported at a time, articles were downloaded in segments as .csv files and then combined into a single .csv file via R for upload into LDAShiny.
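The step of combining segmented exports can be illustrated with a minimal Python sketch. This is not the R code used in the study; the function name `combine_exports` and the column names (`Authors`, `Year`, `Title`, `Abstract`) are assumptions chosen for illustration, and the two in-memory segments stand in for the downloaded .csv files.

```python
import csv
import io


def combine_exports(csv_texts):
    """Concatenate CSV exports that share one header row into a single list of rows."""
    combined = []
    header = None
    for text in csv_texts:
        reader = csv.DictReader(io.StringIO(text))
        if header is None:
            header = reader.fieldnames
        elif reader.fieldnames != header:
            # Refuse to merge segments whose columns differ.
            raise ValueError("export segments have different columns")
        combined.extend(reader)
    return combined


# Two toy segments standing in for separately downloaded exports.
segment_1 = "Authors,Year,Title,Abstract\nA,2001,First title,First abstract\n"
segment_2 = "Authors,Year,Title,Abstract\nB,2015,Second title,Second abstract\n"
rows = combine_exports([segment_1, segment_2])
```

Checking that every segment carries the same header before merging guards against silently misaligned columns in the combined file.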

Before running the model, preprocessing was performed on the data. Terms with no analytic value (“Stop Words” such as “and” and “for”, as well as pronouns and articles pre-determined by the program) were excluded from analysis [5]. In addition, the authors manually excluded words that were felt to have little value in the analysis, such as “pediatric” and “study” (Table 1), as well as a list of words excluded in the article by Sing, et al [1]. Next, “stemming” was performed by the program on the text, which combined related word forms into single stems (eg, “performed” and “performs” into “perform”). A sparsity value of 0.995 was chosen by the authors, meaning that words appearing in fewer than 0.5% of articles were excluded. In the end, a document-term matrix was created which yielded 1,780 terms for analysis, reduced from the 27,358 terms originally imported into the program.
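The effect of the stop-word and sparsity settings can be sketched in a few lines of Python. This is an illustrative reimplementation under stated assumptions, not LDAShiny's own code: the function name `filter_sparse_terms` is hypothetical, the documents are assumed to be already tokenized and stemmed, and a toy sparsity of 0.5 is used so the result is easy to verify by hand (the study used 0.995).

```python
from collections import Counter


def filter_sparse_terms(tokenized_docs, sparsity, stop_words=frozenset()):
    """Return the terms that appear in at least (1 - sparsity) of the documents."""
    n_docs = len(tokenized_docs)
    doc_freq = Counter()
    for tokens in tokenized_docs:
        # Count each term once per document, ignoring stop words.
        doc_freq.update(set(tokens) - set(stop_words))
    # Rounding removes floating-point noise from expressions such as 1 - 0.995.
    min_share = round(1 - sparsity, 12)
    return {term for term, count in doc_freq.items() if count / n_docs >= min_share}


# Toy corpus of four already-stemmed "abstracts".
docs = [
    ["hip", "dysplasia", "study"],
    ["hip", "fractur"],
    ["scoliosi", "fusion", "study"],
    ["hip", "scoliosi"],
]
kept = filter_sparse_terms(docs, sparsity=0.5, stop_words={"study"})
```

With a sparsity of 0.5, only terms found in at least half of the four toy documents survive, so `kept` contains "hip" and "scoliosi". The same rule at 0.995 drops terms found in fewer than 0.5% of abstracts.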

The LDA model was run on the document-term matrix. We chose a goal of determining 50 topics (k), utilizing 10 terms (words) per topic, which is the default option for the program. We chose 1000 iterations of the model with a burn-in value of 100, based on the recommendations of the creators of LDAShiny. An alpha value of 1 was chosen, again based on the creators’ recommendation that alpha be set at 50/k (here, 50/50 = 1). The modeling was performed and subsequently analyzed.
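For readers less familiar with the model, the generative assumptions of LDA as described by Blei et al [3], together with the arithmetic behind the alpha value, can be summarized as follows. The symbols used here are conventional notation rather than anything specific to LDAShiny, and the Dirichlet prior β on the topic-word distributions is an assumption of this summary, since its value is not reported above.

```latex
\begin{align*}
\theta_d &\sim \mathrm{Dirichlet}(\alpha) && \text{topic mixture of abstract } d \\
\phi_t &\sim \mathrm{Dirichlet}(\beta) && \text{word distribution of topic } t,\; t = 1,\dots,k \\
z_{d,n} \mid \theta_d &\sim \mathrm{Categorical}(\theta_d) && \text{topic assigned to the } n\text{th term of abstract } d \\
w_{d,n} \mid z_{d,n} &\sim \mathrm{Categorical}(\phi_{z_{d,n}}) && \text{observed term} \\
\alpha &= \frac{50}{k} = \frac{50}{50} = 1 && \text{with } k = 50 \text{ topics}
\end{align*}
```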

Information yielded included the 50 topics sought, the trending of each topic (“hot” or “cold”), and the best-fitting paper from the source journals for each topic. To determine the trends of topics, regression slopes were computed for each topic based on the proportion of that topic in each year. Topics with a statistically significant (p<0.05) positive regression slope were considered to be increasing in interest, or “hot”, while topics with a statistically significant negative regression slope were considered to be declining in interest, or “cold”. If the regression slope was not significant, the topic was classified as “fluctuating”. The most popular and least popular topics were determined via the prevalence score calculated by the model.
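The hot/cold/fluctuating rule can be sketched as an ordinary least-squares regression of yearly topic proportion on publication year. The following Python sketch is an illustration under assumptions, not the LDAShiny implementation: it assumes one proportion per year for 21 years (2000-2020), tests the slope with a t statistic against the two-sided 5% critical value for 19 degrees of freedom instead of computing an exact p-value, and uses made-up proportions.

```python
import math

# Two-sided 5% critical value of Student's t with 19 degrees of freedom
# (21 yearly points minus 2 fitted parameters); an assumption of this sketch.
T_CRIT_DF19 = 2.093


def classify_trend(years, proportions, t_crit=T_CRIT_DF19):
    """Label a topic 'hot', 'cold', or 'fluctuating' from its yearly proportions."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(proportions) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, proportions))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares and standard error of the slope.
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, proportions))
    std_err = math.sqrt(sse / (n - 2) / sxx)
    if std_err == 0:
        t_stat = math.copysign(math.inf, slope) if slope else 0.0
    else:
        t_stat = slope / std_err
    if abs(t_stat) <= t_crit:
        return "fluctuating"
    return "hot" if slope > 0 else "cold"


years = list(range(2000, 2021))
noise = [0.0005 if i % 2 == 0 else -0.0005 for i in range(21)]
rising = [0.010 + 0.001 * i + e for i, e in enumerate(noise)]
falling = [0.030 - 0.001 * i + e for i, e in enumerate(noise)]
flat = [0.020 + e for e in noise]
labels = [classify_trend(years, series) for series in (rising, falling, flat)]
```

For a different number of yearly points, the critical value passed as `t_crit` would need to match the corresponding degrees of freedom.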

The numbers of abstracts belonging to each topic were calculated manually by the authors utilizing the prevalence score and the total number of abstracts analyzed. In addition, LDAShiny assigns abstracts to each topic based on the similarity of that abstract to a topic, generating a theta score. The theta score was used to identify the best-fitting JPO A or B article for each topic. As no actual patient information was utilized, Institutional Review Board evaluation and approval were not required for this study.
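The two calculations described above (abstract counts from prevalence, and best-fit articles from theta) can be sketched in Python as follows. This is a hypothetical illustration: prevalence is assumed here to be the mean theta of a topic across all abstracts, which may differ from the prevalence score reported by LDAShiny, and the theta matrix and titles are invented toy values.

```python
def summarize_topics(theta, titles):
    """Rank topics by prevalence; theta[d][t] is the share of abstract d assigned to topic t."""
    n_docs = len(theta)
    n_topics = len(theta[0])
    summary = []
    for t in range(n_topics):
        # Assumed definition: prevalence is the average theta of the topic.
        prevalence = sum(row[t] for row in theta) / n_docs
        # Best-fit abstract is the one with the highest theta for this topic.
        best_doc = max(range(n_docs), key=lambda d: theta[d][t])
        summary.append({
            "topic": t + 1,
            "prevalence": prevalence,
            "estimated_abstracts": round(prevalence * n_docs),
            "best_fit_title": titles[best_doc],
        })
    return sorted(summary, key=lambda item: item["prevalence"], reverse=True)


# Toy theta matrix: 4 abstracts (rows) by 3 topics (columns); each row sums to 1.
theta = [
    [0.7, 0.2, 0.1],
    [0.6, 0.3, 0.1],
    [0.1, 0.1, 0.8],
    [0.2, 0.7, 0.1],
]
titles = ["Abstract A", "Abstract B", "Abstract C", "Abstract D"]
ranked = summarize_topics(theta, titles)
```

In this toy example the first topic has the highest prevalence (0.4), corresponds to an estimated 2 of the 4 abstracts, and is best represented by "Abstract A".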

Table 1: Tabular listing of all words/stems removed manually by the authors as potential terms.

Results

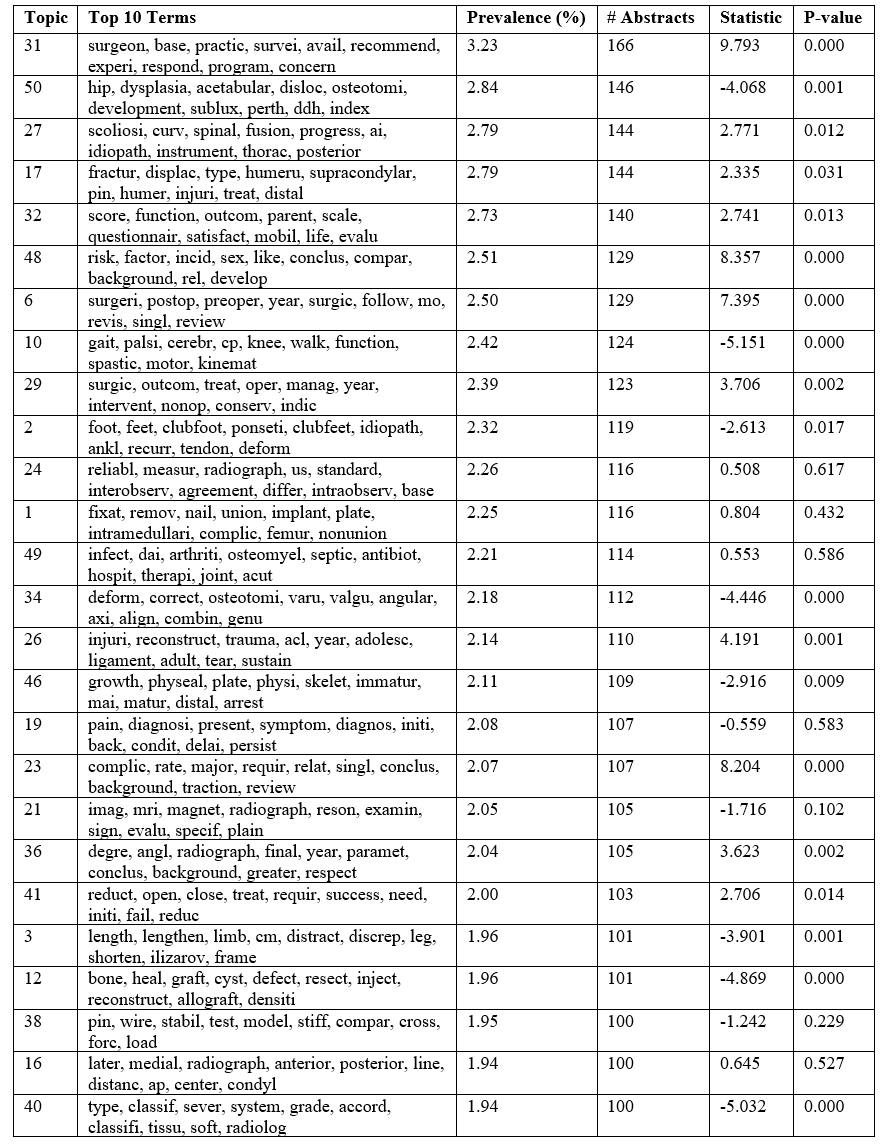

The 50 topics generated are listed in descending order of prevalence, along with the number of abstracts that correlated with each topic, in Table 2. Tables 3 and 4 list the 10 topics with the greatest and least numbers of associated abstracts from the study period, respectively (Tables 2, 3, and 4).

To document trends in these Pediatric Orthopaedic literature sources over time, we determined the 3 topics with the most significantly positive trends (“Hottest”) and those with the most statistically significant negative trend data (“Coldest”) over the time period studied. These are represented graphically in Figure 1. It is important to note that these did not necessarily correlate with the most or least common topics over time. In fact, while 2 of the “Hottest” topics (31 and 48) were among the most published, topic 10 had a very high number of associated publications, yet was statistically one of the 3 “coldest” over the period examined.

In addition to the generation of topics and assessment of trends in these topics over time, application of the LDA model allowed determination of the representative article from the source journals that fit most closely (or had the highest “Theta score”) with each topic. We have chosen to list only those associated with the top and bottom 10 most prevalent topics. These results are presented in Tables 3 and 4.

Table 2: All 50 topics discovered by application of the LDA program listed in order of prevalence.

Table 3: Top 10 (Most Published) topics with number of abstracts for each topic and “best-fit” article title for each topic.

Table 4: Bottom 10 (Least Published) topics with number of abstracts for each topic and “best-fit” article title for each topic.

Discussion

Evaluation of large volumes of published medical literature, in an attempt to understand how specific characteristics have changed over time, has become increasingly common. Examples include efforts to determine and identify “classic” or highly cited references in subspecialty areas of Orthopaedic Surgery, and assessment of the changing contributions to the Orthopaedic and Traumatology literature originating from specific countries of origin over a period of time [6,7]. In addition, researchers have investigated trends in author gender in high impact journals, as well as attempted to determine trends in the numbers and types of articles published in certain specialty publications over a fixed period [8,9].

Machine learning is a form of artificial intelligence that involves the use of algorithms that give computers the ability to learn without specific human input of rules or instructions that may potentially introduce bias. Speech and image recognition activities, as well as self-navigating vehicles and facial recognition programs that are used daily, are all examples of the extension of machine learning and artificial intelligence into modern life. Thus far, reports of orthopaedically-related clinical applications of ML include use in the process of scoliosis diagnosis and in the assessment of bone age [10,11].

One of the initial descriptions of ML to analyze a large volume of scientific text was published by Griffiths and Steyvers in 2004 [12]. The authors used these methods to mine the abstracts published in the Proceedings of the National Academy of Science (PNAS) from 1991 to 2001 to extract a set of topics from those abstracts. These topics were then analyzed to determine relationships between scientific disciplines and assess trends in the topics over time.

Sing, et al utilized further adaptations in ML (using the LDAShiny tool) to review orthopaedic subspecialty literature in 2017 [1]. Their goal was to apply available ML computing techniques to assess the Spine-related literature published from 1978-2015. This allowed the authors to mine a large volume of text from abstracts in an unbiased fashion to discover thematic topics and identify 100 research topics within the spine literature, and then to determine “hot” and “cold” trends during that time period. The authors felt that the ability to examine large volumes of text, while minimizing the risk of bias or human error, could lead to improvements in the processes of determining and updating clinical guidelines, as well as provide direction for future research. They recognized that the process of labeling the topics, or associating them with specific clinical situations or activities, did require further input from those with specialized knowledge [1].

While a detailed description of the computer science and mathematics upon which these techniques are based is beyond the scope of this current report, it is important to highlight and describe the concept of the “Topic” which is the fundamental finding reported by this type of analysis. As noted in the Materials and Methods section of this paper, thematic topics can be thought of as combinations of words that have a high tendency or likelihood of appearing together within a segment of text. These topics can then be analyzed for increasing or decreasing popularity over time, and thus topic trends can be viewed over time. Finally, the abstract/paper that matches the topic in question most closely can be identified through a best-fitting process.

We used a readily available form of machine learning (LDAShiny) to analyze text in a manner similar to that reported by Sing, et al, and focused specifically on data published over a twenty-year period in two journals with an exclusive focus on pediatric orthopaedics [1]. Correlation of the topic trend data, associated publication numbers, and the best-fit articles generated some interesting findings and further questions. It is not a surprise that 2 of the “hottest” topics (topics 31, 48) also fall in the top 6 topics with greatest overall prevalence and number of associated abstracts during the period of study. However, it is interesting that one of the top 10 most published topics, with a best-fit abstract title focused on gait in cerebral palsy patients, was noted to fall in the “Coldest” category (topic 10). Review of the graph in Figure 1 illustrates a possible explanation, in that it appears that there was a marked peak in that topic early on in the study period, and then a precipitous drop-off, or negative slope, over time. This specific finding may suggest that there is an opportunity for more detailed analysis of sources of literature that investigate orthopaedic care for patients with cerebral palsy.

Another interesting finding includes the trend data of topic 23, which correlated most closely with an abstract regarding surgical complications, and was strongly positive to the point of reaching the top 3 “Hottest” category. However, the overall number of associated abstracts over time was not sufficient to warrant a position in the overall top ten listing by prevalence. Finally, the fact that a topic (topic 31) appearing to have little relation to direct patient care or management was found to have the greatest number of associated abstracts, and was amongst the “Hottest” of the topics of the study, seems deserving of further investigation.

As noted by other authors [1], the topics generated can be labeled, or associated, with specific clinical issues. For example, topic 27 is clearly associated with surgical management of adolescent idiopathic scoliosis, while topic 32 involves studies that investigate the measurement of patient outcome data in orthopaedic surgery. The best-fit article, as determined by LDA, for each topic can be a helpful guide. However, correlating a topic with a specific clinical situation or label in many cases requires input from those with specialized knowledge of the field, and that input can itself be affected by bias or error.

There are potential limitations to this study. One of the most obvious is the relative lack of familiarity and facility of most pediatric orthopaedic clinicians (the primary audience for the journals reviewed) with the computing methods and technologies employed in generating the data used. The authors hope that they have clarified the terminology (such as topics) sufficiently. In addition, there is the concern expressed by Sing, et al [1] that one of the limitations of this type of an approach to the literature is that it is not possible to factor in any concerns or issues with the quality of the journals or publications undergoing analysis. While that may be an issue in some fields, we do not share a similar concern regarding the sources of the abstracts reviewed for this investigation due to the reputation of the source journals within the field of Pediatric Orthopaedics. We acknowledge that many articles regarding Pediatric Orthopaedics are published in journals other than JPO A&B. However, the authors felt that the process of manually identifying articles from non-Pediatric Orthopaedic-specific sources by title could have been an unnecessary source of bias or error. As such, the literature sources were limited to review of JPO A&B in an attempt to minimize that potential issue.

Finally, despite the fact that we actively edited and added multiple “Stop Words” (Table 1) that were beyond those chosen by the program itself, it may be that we should have been more liberal with word elimination. Whether greater selectivity would have pushed the topics toward a point at which they were more recognizable or more easily associated by clinicians with obviously clinical situations will require further evaluation.

Overall, these results are particularly interesting, and could prove to be a valuable starting point for the use of these methods to analyze the Pediatric Orthopaedic literature over periods of time. This is a very different way to look at the literature, but the use of an algorithm-based program has the potential benefit of limiting human bias or error in the process. We concur with Griffiths and Steyvers [12] that the ability to determine topics that are either “hot” or “cold”, and the ability to do it in an objective fashion, is one of the most important applications of these processes. Our analysis, specifically the trending of the topics, raises questions that may warrant further investigation.

We believe that this effort is important in that it demonstrates what is currently technologically possible, and hints at the level of information that could be determined through use of these rapidly evolving techniques in the future. The advantages of generating and using this type of information, which should minimize the potential for human error or bias, are obvious. We agree with Sing, et al [1] that these techniques could improve the processes of formation and updating of clinical practice guidelines, as well as help guide the directions of future research. Finally, Bashir et al reported that the majority of systematic reviews that have been published are not updated to reflect new evidence [13]. Machine learning methods, similar to those used in this demonstration, could be utilized to analyze and re-analyze large amounts of published data to keep up with new studies and ensure that recommendations and clinical care guidelines reflect all available data.

Figure 1: Graphs depicting the 3 “Hottest” and “Coldest” topics over the period reviewed.

Conclusion

This study demonstrates the first use of machine learning techniques to review exclusively Pediatric Orthopaedic literature, and demonstrates what can be done at this time. These methods provide a novel way to determine topics and document trends in the literature in a manner that appears to minimize the effects of human error and/or bias on the process. Our review of 20 years of Pediatric Orthopaedic literature utilizing these techniques provides interesting, but in many ways confusing, results. Much of the confusion may be a result of the use of technology and terminology that is new and unique for most in clinical medicine. Future applications of these artificial intelligence techniques could be far reaching in multiple areas of academic and clinical medicine, including Pediatric Orthopaedics, but may be limited by the novelty and underlying complexity of the mathematical and computational systems.

Disclosure: The authors have no disclosures

Funding: No external funding was used to support this activity

References

- Sing DC, Metz LN, Dudli S. Machine learning classification of 38 years of spine-related literature into 100 research topics. Spine, 2017; 42(11): 863-870.

- De la Hoz MJ, Fernandez-Gomez MJ, Mendes S. LDAShiny: An R package for exploratory review of scientific literature based on a Bayesian probabilistic model and machine learning tools. Mathematics, 2021; 9(14): 1671. DOI: https://doi.org/10.3390/math9141671.

- Blei DM, Ng AY, Jordan MI. Latent Dirichlet allocation. J. Mach. Learn. Res, 2003; 3: 993-1022.

- Asmussen CB, Muller C. Smart literature review: A practical topic modeling approach to exploratory literature review. J. Big Data, 2019; 6: 93.

- Benoit K, Muhr D, Watanabe K. Stopwords: Multilingual Stopword Lists, R package version 0.9.0; R Foundation for Statistical Computing: Vienna, Austria, 2017.

- Dartus J, Saab M, Erivan R, et al. Bibliometric evaluation of orthopaedics and traumatology publications from France: 20-year trends (1998-2017) and international positioning. Orthop Traumatol Surg Res, 2019; 105: 1425-1437.

- Varghese RA, Dhawale AA, Zavaglia BC, et al. Citation classics in pediatric orthopaedics. J Pediatr Orthop, 13(6): 667-671.

- Hiller KP, Boulos A, Tran MM, et al. What are the rates and trends of woman authors in three high impact orthopaedic journals from 2006-2017? Clin Orthop Relat Res, 2020; 478: 1553-1560.

- Mimouni M, Cismariu-Potash K, Ratmansky M, et al. Trends in physical medicine and rehabilitation publications over the past 16 years. Arch Phys Med Rehabil, 2016; 97(6): 1030-1033.

- Chen K, Zhai Z, Sun K, et al. A narrative review of machine learning as promising revolution in clinical practice of scoliosis. Ann Transl Med, 2021; 9(1): 67.

- Dallora AL, Anderberg P, Kvist O, et al. Bone age assessment with various machine learning techniques: a systematic literature review and meta-analysis. PLOS ONE 2019. DOI: https://doi.org/10.1371/journal.pone.0220242.

- Griffiths TL, Steyvers M. Finding scientific topics. PNAS, 2004; 101(sup 1): 5228-5235.

- Bashir R, Surian D, Dunn AG. Time-to-update of systematic reviews relative to the availability of new evidence. Systematic Reviews, 2018; 7: 195. DOI: https://doi.org/10.1186/s13643-018-0856-9.